Missing Font Information in PDFs: How Metadata Affects Text Optimization

This document is the second in a series of white papers that explore the problem of missing font information in PDFs. Since its inception, the PDF has revolutionized the way individuals and businesses communicate and exchange information. The promise to maintain informational integrity and display content consistently across different platforms secured the PDF’s position as a leader in document exchange. Yet despite its innovations, the PDF’s own evolution has also brought new challenges.

Missing PDF Fonts: Who Does It Affect and Why Is It Important?

At first glance, missing font information may appear trivial. After all, who hasn’t experienced unintelligible characters while scrolling through a PDF? However, this problem is more than just improperly rendered text on a screen. For developers and IT managers, missing font information is problematic as it can delay software development and hinder the production cycle. For end‐users, it translates into lost time and compromised deadlines when they cannot display, print, or edit content properly.

The inconvenience of missing font information affects more than disgruntled individuals in the workplace; it also undermines a document’s accuracy and its value as a product. The original purpose of the portable document format was to ensure content integrity and display consistency, but what happens when content is incomplete, cannot print accurately, or can even change?

The issue of improper text rendering is an ironic side‐effect of the PDF’s popularity. After all, the format’s portability is the cornerstone of its purpose. And although developers will always design PDFs to accommodate as many configurations as possible, ultimately, they will never be able to fully accommodate how users choose to work with the portable document format.

Missing Metadata and the User Experience

The portable document format is a complex technology and there are many internal variables that, if left unchecked, can compromise its final output. The following sections provide a brief overview of one of the many problems that can occur when development corners are cut: missing or incorrect metadata.

When metadata goes missing or is incorrect (whether through corruption or developmental oversight), viewers cannot optimize the contents of the document, which means that the PDF cannot guarantee optimal usability within the context in which it is being used. For example, if a user is unable to display or print the contents of a document accurately, the usability of the PDF is not 100% reliable. Likewise, if a user is unable to search a document for a word or extract content, the PDF has also failed to provide optimal usability.

The term “context” is important because it is closely tied to the user experience and often, a positive or negative user experience influences product or vendor perception. Therefore, PDFs that cannot be properly optimized have the potential (if it happens often enough) to directly affect product perception. Unfortunately, many PDF developers are unaware that their PDFs are internally unsound (as in the case of missing or incorrect font information), and their work goes unchecked, only to fall under the scrutiny of disgruntled end‐users.

If metadata goes missing, a PDF viewer can experience text rendering problems such as missing or unintelligible characters and a slow refresh rate. Within certain contexts, these problems are more apparent (and frustrating) than in others. Take, for example, working with documents remotely. Before the advent of thin client environments such as terminal servers, users worked with PDF documents locally, using a viewing application such as Adobe Reader.

As the popularity of remote access grew, so did the expectation of working remotely with PDFs while maintaining the same real‐time functionalities. However, PDF rendering issues are amplified in these remote environments. The lack of quality and detail of poorly optimized text is more apparent, as is the document’s slower refresh rate.

Text Rendering on Screen

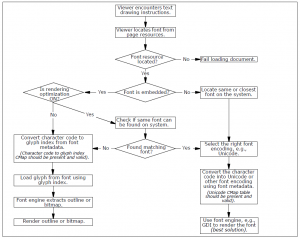

The process that a viewer or rendering application undergoes to turn text instructions into meaningful glyphs on screen is complex. Figure 1 provides a simplified overview of this process; however, it is important to note that it is during this process that missing metadata takes its toll. The following sections outline some of the different scenarios that might occur when font information goes missing.

Figure 1: Overview of the Text Rendering Process For On Screen Display

Missing Font Resources

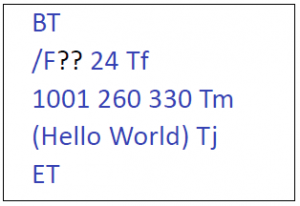

When a PDF viewer encounters a text drawing instruction, it loads a specific font from the font resource. If this resource is missing (Figure 2), the viewer is unable to display any characters that use the specified font and is also unable to provide a substitute for it. Most often, the viewer will simply fail to load the document. In some cases the viewer may substitute the missing fonts arbitrarily, but this usually produces unpredictable text rendering results.

Figure 2: Missing Font Resource

Missing Font Family Name

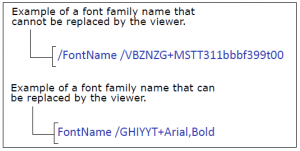

Another place where font information can go missing is the font family name. For example, if the font family name “Arial” is missing (Figure 3), the viewer is unable even to determine an equivalent system font to use as a replacement. As a result, the viewer is unable to optimize the loading and rendering of the PDF file.

Figure 3: Missing Font Family Name

Character Codes to Glyphs: The CMap Leads the Way

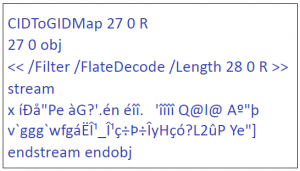

However, if the font resource is found, the viewer processes the information and, depending on whether the fonts in the PDF are embedded or not, follows one of several text decoding methods. If the font is embedded and the viewer is not set to optimize the rendering of text, the viewer will refer to the embedded (CID to Glyph) CMap in the PDF for information on how the font engine can convert the text into glyphs.

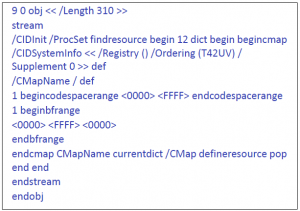

Essentially, the CMap is metadata (Figure 4) that maps character codes to their corresponding graphical representations (glyphs) in order for the font engine to render all the details of each character. However, if there is information missing in the CMap, the font engine is unable to accurately render the characters and the text is unrecognizable.

Figure 4: CID to Glyph CMap

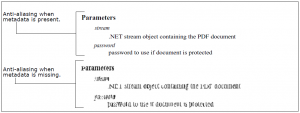

In this situation, anti‐aliasing is unable to function using Windows GDI. For thin client services such as remote terminals, PDAs, and virtualization environments, the absence of anti‐aliasing dramatically affects text processing speed and display quality (Figure 5).

Figure 5: Anti‐aliasing experienced with thin client services
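As a rough illustration of the lookup described in this section, the sketch below converts a run of character codes to glyph indices through a mapping table and reports the codes that have no entry. The function name `codes_to_glyphs` and the dictionary layout are assumptions made for this example; they do not come from any particular viewer or font engine.

```python
def codes_to_glyphs(codes, cid_to_gid):
    """Map character codes to glyph indices using a CID-to-glyph table.

    Returns (glyphs, missing). Codes without an entry are listed in
    `missing` and replaced by glyph 0, which is conventionally the
    ".notdef" (missing glyph) outline in a font file.
    """
    glyphs = []
    missing = []
    for code in codes:
        gid = cid_to_gid.get(code)
        if gid is None:
            missing.append(code)
            glyphs.append(0)
        else:
            glyphs.append(gid)
    return glyphs, missing
```

With a table that lacks an entry for code 3, `codes_to_glyphs([1, 2, 3], {1: 36, 2: 37})` returns the glyph list `[36, 37, 0]` and reports `[3]` as missing, which is the kind of gap that appears on screen as an unrecognizable character.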

Viewer Rendering Options

If the font is not embedded, the viewer will look to the system to find a substitute or replacement font. In most cases, even though the binary information that makes up the font file is missing, its corresponding positional and descriptive metadata is sufficient to enable the viewer to compensate by substituting the font.

If a matching font is found on the system, the viewer proceeds to use the services of a font engine (such as GDI, FreeType, or a commercial library) to render the final output to the screen. But what if the system fonts are not found or the viewer is not supplied with its own standard list of replacement fonts? The viewer will have to select the closest font replacement instead and fall back on the drawing parameters provided in the PDF’s metadata.

Without these parameters, the viewer is unable to provide a font engine with the information required to draw the glyph(s). If all the information in the font metadata is valid and well‐structured, the viewer is able to load the appropriate glyphs and the font engine can render the final output.
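The substitution order described above can be sketched roughly as follows. This is a simplified illustration, not the logic of any specific viewer: the function name `choose_font`, the default fallback list, and the returned labels are all hypothetical, and a real viewer would also weigh the drawing parameters in the font metadata when picking the closest replacement.

```python
def choose_font(requested_family, system_fonts,
                fallbacks=("Arial", "Times New Roman", "Courier New")):
    """Pick a font for a non-embedded PDF font.

    Returns (font_name, how), where `how` is "exact" when the requested
    family is installed, "fallback" when a name from the replacement list
    is used instead, and "none" when nothing suitable is available.
    """
    # Compare names case-insensitively but return the installed spelling.
    installed = {name.lower(): name for name in system_fonts}
    if requested_family and requested_family.lower() in installed:
        return installed[requested_family.lower()], "exact"
    for candidate in fallbacks:
        if candidate.lower() in installed:
            return installed[candidate.lower()], "fallback"
    return None, "none"
```

Note that a missing or cryptic family name skips the first branch entirely, so the viewer is left with whatever the replacement list happens to offer.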

Character Codes to Unicode: Different Metadata, Same Result

As we have seen in the previous section, missing information in the CMap causes rendering problems. The next question is: what about the CID to Unicode CMap? What happens if information is missing there as well? Just like its embedded counterpart, the Unicode CMap provides character to Unicode mapping information (Figure 6). This includes character encoding parameters such as WinAnsi, MacRoman, and Unicode. And just as with the (CID to Glyph) CMap, if there is information missing in the Unicode CMap, the font engine is once again unable to draw the appropriate glyphs and the user can expect more of the same unpredictable text rendering results.

Figure 6: CID to Unicode CMap

Incorrect Metadata

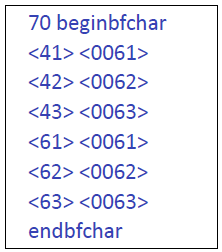

By contrast, incorrect metadata presents a different set of problems. Because the information is incorrect, the resulting text may (in extreme cases) display incorrectly, as when a Unicode CMap table contains incomplete or wrong entries. In Figure 7, both lower‐ and upper‐case characters are pointing to the same Unicode values. As a result, the viewer may display the same characters for lower‐ and upper‐case letters.

Figure 7: Unicode CMap Errors
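One way to look for the kind of error shown in Figure 7 is to check whether several character codes point to the same Unicode value. The sketch below is a minimal illustration that only reads `beginbfchar` ... `endbfchar` sections of a Unicode CMap and assumes the destination values are well-formed UTF-16BE hex strings; the function names `parse_bfchar` and `find_collisions` are hypothetical. A shared value is not always an error (two glyph variants can legitimately map to the same character), so the output is a list of entries to review rather than a verdict.

```python
import re
from collections import defaultdict

# One "<source code> <Unicode value>" pair inside a bfchar section.
BFCHAR_ENTRY = re.compile(r"<([0-9A-Fa-f]+)>\s*<([0-9A-Fa-f]+)>")


def parse_bfchar(cmap_text):
    """Return {character code: Unicode text} from bfchar sections only."""
    mapping = {}
    for section in re.findall(r"beginbfchar(.*?)endbfchar", cmap_text, re.S):
        for src, dst in BFCHAR_ENTRY.findall(section):
            mapping[int(src, 16)] = bytes.fromhex(dst).decode("utf-16-be")
    return mapping


def find_collisions(mapping):
    """Return {Unicode text: [codes]} for values shared by several codes."""
    by_text = defaultdict(list)
    for code, text in sorted(mapping.items()):
        by_text[text].append(code)
    return {text: codes for text, codes in by_text.items() if len(codes) > 1}
```

Range sections (`beginbfrange`) are deliberately left out to keep the example short; a fuller check would need to expand them as well.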

Is There Blame to Place?

Most of the aforementioned problems occur even before the PDF reaches the viewing application. The causes? Often, weak document design and poor development practices are the main culprits behind many of the missing or incorrect metadata problems found in PDFs circulating today. For example, some developers choose not to embed vital font information if it makes the file too large or if the data is not required by the PDF specifications.

Missing or incorrect metadata is a commonly overlooked problem because its effects are often only noticeable away from the familiar development environment. Lack of testing and the assumption that “if a PDF renders properly in Acrobat, it will render the same elsewhere” create problematic documents not only for end‐users, but also for the vendors that generate them.

So Many Branches to Prune – Starting at the Root: Where to Begin Tackling the Problem of Incorrect Metadata and Font Information

Where does one begin tackling the problem of incorrect metadata and font information? Since PDF development and production is always changing and growing, where is the starting point? What type of development tool or best practices should developers think about and why?

First and foremost, at the production level, developers need to use the right software tools. With the right tools, developers can start generating well‐structured PDFs that will optimize and render properly. Not only are these good documents appreciated by end-users, but other developers who need to work with them later in different environments also benefit. For example, some tools used by developers tend to remove some TrueType tables because according to the PDF specifications, they are not needed by Acrobat. However, these tables could be required for other purposes such as rendering PDFs on thin clients and PDAs, or exporting document content to other formats such as XPS or XAML.

Yet using the right tools is often not enough. Because PDF is a complex technology, developers need to think “outside the box”, especially regarding the PDF specifications. Many items are not included or mentioned in the PDF specifications, yet these are part of the solution when trying to create well‐structured and optimized documents. The following sections outline some of the best practices (based on years of working with countless problematic and optimized PDF documents) that Amyuni Technologies believes lead to better quality PDFs.

Solid Tables Mean Solid Font Files

Developers should ensure that embedded font files contain all of their respective tables. This way the font file is valid not only from a PDF standpoint, but also for other tools or font engines that might be required to process the document.
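A quick check along these lines can be sketched by reading the table directory at the start of a TrueType (sfnt) font file and comparing it against a list of expected tables. The directory layout used here (a 12-byte header followed by 16-byte records holding a tag, checksum, offset, and length) is the standard sfnt layout; the `REQUIRED_TABLES` list and the function names are assumptions chosen for this illustration and should be adapted to whatever the downstream tools actually need.

```python
import struct

# Assumed checklist for TrueType outlines; adjust to the target tools.
REQUIRED_TABLES = ("cmap", "glyf", "head", "hhea", "hmtx",
                   "loca", "maxp", "name", "post")


def list_tables(font_bytes):
    """Return the table tags found in an sfnt table directory."""
    if len(font_bytes) < 12:
        raise ValueError("too short to be an sfnt font")
    # Header: 4-byte version, then a 2-byte table count (big-endian).
    _version, num_tables = struct.unpack(">IH", font_bytes[:6])
    tags = []
    for i in range(num_tables):
        start = 12 + 16 * i
        record = font_bytes[start:start + 16]
        if len(record) < 16:
            raise ValueError("truncated table directory")
        tag, _checksum, _offset, _length = struct.unpack(">4sIII", record)
        tags.append(tag.decode("latin-1"))
    return tags


def missing_tables(font_bytes, required=REQUIRED_TABLES):
    """Return the required tables that the font file does not contain."""
    present = set(list_tables(font_bytes))
    return [tag for tag in required if tag not in present]
```

The check only confirms that a table is listed in the directory; it does not verify checksums or the contents of the tables themselves.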

Include Valid Metadata

Developers should ensure that the font metadata contains a valid font family name, either through the FontName attribute or the FamilyName attribute. Using cryptic names such as “/F1234,Bold” to represent /Arial,Bold is permitted by PDF specifications, but prevents the viewer from doing any optimization, since the viewer will not recognize “/F1234” as a valid font family name. Developers also need to make sure that all metadata values reflect the actual value(s) of the font file, even if these values seem unimportant. A common example is setting an incorrect value for the AvgWidth, which has no (immediate) visual effect until a viewer attempts to optimize the viewing of the PDF.
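To make the naming issue concrete, the sketch below extracts a family name from a PDF font name and checks it against a list of families the system knows about. It is an illustration only: the function names and the `known_families` parameter are hypothetical, the optional six-letter subset prefix (as in `ABCDEF+Arial`) is stripped before comparison, and real viewers typically apply more elaborate name normalization.

```python
import re

# Subset fonts carry a tag of six uppercase letters followed by "+".
SUBSET_PREFIX = re.compile(r"^[A-Z]{6}\+")


def family_from_font_name(font_name):
    """Return the family part of names such as '/Arial,Bold'."""
    name = font_name.lstrip("/")
    name = SUBSET_PREFIX.sub("", name)
    # The style usually follows a comma or a hyphen.
    return re.split(r"[,-]", name, maxsplit=1)[0]


def is_recognizable(font_name, known_families):
    """True when the family part matches a known family (case-insensitive)."""
    family = family_from_font_name(font_name).lower()
    return family in {known.lower() for known in known_families}
```

Under these assumptions, `is_recognizable("/Arial,Bold", ["Arial"])` is true, while `is_recognizable("/F1234,Bold", ["Arial"])` is false, leaving the viewer with nothing to match against.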

Font Duplication

Optimizing PDF files so that they do not contain multiple instances of the same font is also important. Developers frequently encounter PDFs that contain one instance of a specific font per page. Font duplication not only hinders optimization but also increases file size and slows down document processing. True, it is easier to generate a PDF that contains multiple instances of a font, but it then becomes much more complicated to make sure those duplicate fonts are removed before the document is saved.
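One simple way to spot duplicated fonts is to group embedded font programs by name and by a hash of their bytes. The sketch below assumes the caller has already extracted `(object_id, font_name, font_bytes)` tuples from the PDF with a parsing tool of their choice; that input shape and the function name `group_duplicate_fonts` are assumptions made for this example. It only finds byte-identical copies, so subsets of the same font with different glyph selections would not be grouped.

```python
import hashlib
from collections import defaultdict


def group_duplicate_fonts(fonts):
    """Group font objects whose name and embedded bytes are identical.

    `fonts` is an iterable of (object_id, font_name, font_bytes) tuples.
    Returns a list of object-id lists, one per set of duplicates.
    """
    groups = defaultdict(list)
    for object_id, font_name, font_bytes in fonts:
        digest = hashlib.sha256(font_bytes).hexdigest()
        groups[(font_name, digest)].append(object_id)
    return [ids for ids in groups.values() if len(ids) > 1]
```

Each returned group can then be collapsed to a single font object, with the page resources updated to reference the surviving copy.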

Tools and Alternatives

PDF is not a new technology, yet there are constantly new PDF tools to work with. These include free online PDF conversion services, application plug‐ins, and popular open source tools. How is one to choose which tools offer the quality and optimization output most appropriate for the task at hand?

To many, PDF is simply the final output of a work document. To others (especially within development environments), a PDF is a document format that may be integrated into a larger, more complex series of tasks. For instance, some applications process large numbers of individual PDF files, remove specific or sensitive metadata from them, and recreate single PDF documents.

By contrast, other applications take single large PDF files and recreate hundreds (or more) of individual documents. In both cases, such applications routinely process PDF files that come from different producers, differ in (internal) structure, contain errors, or have vital information missing. It is in these demanding PDF processing tasks (often in large corporate environments) that the absence of the right PDF tools (or their customizations) and development experience leads to problems.

An example of a tool that was designed to operate within the confines of demanding PDF processing is the Amyuni PDF Converter. Amyuni was aware early on of the technological and development directions in which PDF was heading, and designed the PDF Converter to reflect and accommodate the ever‐changing PDF landscape. From the start, it provided what developers expected from a conversion tool: documents that rendered and optimized predictably, regardless of the output environment.

A Needle in the PDF Haystack

In addition to missing metadata, the inability to know why or where the internal structure of a PDF has gone wrong slows down development cycles, not to mention increases technical support costs later on. An example of a tool designed to explore these problems is the Amyuni PDF Analyzer. It was designed for developers who need to know whether a document complies with the minimum font specifications needed to optimize font rendering. Its ability to scrutinize the many PDF objects gives developers a better understanding of the inner workings of their documents and, ultimately, greater control over how their documents are processed and optimized.

Learning From the Evolution of PDF

Having been involved with the portable document format almost from the beginning, developers at Amyuni Technologies have had the opportunity to experience and troubleshoot a multitude of PDF development scenarios. The result is a set of PDF tools that produce documents free of duplicate fonts, making PDFs smaller and faster to process.

Because the best practices discussed earlier are integrated into Amyuni products, documents are already well‐structured. The well‐structured fonts allow any viewer to optimize the rendering and display the document seamlessly, whether from a desktop or a remote connection. If a font that is in the PDF is missing from the system, a viewer such as the Amyuni PDF Creator can easily find a substitute font. The result: a PDF that renders as it should on different platforms and is almost indistinguishable from the original document.

Applications

Pruning the tree of problematic PDFs is one approach to fixing missing or incorrect metadata, and this is simply the reality of software development. However, it has always been Amyuni’s approach to avoid potential metadata‐related problems (by combining the right tools and best practices) straight at the root before they can arise. Why? Because the PDF is expected to do more than it did 15 years ago. For example, PDFs are expected to:

- Display in numerous applications and viewers other than Adobe Acrobat.

- Be archived in various media formats, such as XML, XAML, databases, etc.

- Be accessed and processed using different tools and platforms.

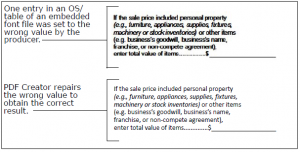

Of course, no development environment can ever predict or avoid every possible PDF scenario. Developers are often left having to fix some of the problems discussed in this paper and, again, the choice of tools can lead to different results, some not always apparent until later on. The Amyuni PDF Creator is another example of such a tool. Positioned to enable developers to optimize documents, the PDF Creator can:

- Fix a font file that was not properly embedded by a third‐party tool or fix errors in its table (Figure 8).

- Detect and remove duplicate font entries in a PDF.

- Ensure that all font file tables and font metadata are accurate.

Figure 8: Font Error and Repair

As we have seen, achieving optimal PDF results can be a daunting affair. Missing or erroneous metadata is just one of several scenarios that can undermine the user experience and integrity of a PDF document. The nuances inherent in a PDF document are not always obvious until it’s too late.

Conclusion

Inaccurate or illegible characters may be negligible to some in an office memo, but when PDF documents are the cornerstone of medical records, insurance policies, or judicial statements, there is no room for inaccuracies or poor legibility. Their content must be clear and indisputably accurate. The same expectations are also warranted in PDF application environments that rely on the timely and efficient processing of documents to avoid software crashes and production interruptions.

As the use of PDF continues to grow, so does our dependence on it. The recent emergence (and importance) of PDF/A as a standard is a testament to how seriously document consortiums view the integrity of PDF content. Although new document formats position themselves as potential alternatives to the portable document format, it’s up to PDF developers and vendors to continue to push for better and more efficient methods of improving a technology we sometimes take for granted.

Franc Gagnon is the technical copywriter for Amyuni Technologies (www.amyuni.com).

© Amyuni Technologies Inc. All rights reserved. All trademarks are property of their respective owners.
